Hi, everybody. =)

I am Raen, and I am going to introduce memory system architecture.

I will split it into N topics, so you guys can read through it more easily.

First up is the introduction part… (I really hate the theory part, it is very boring for me… T_T)

Ok, let’s begin the introduction.

Memory systems were already being used with computers back in the 1960s.

In those early days memory was very expensive and unreliable, because the elements involved vacuum tubes, switching elements and mercury delay lines.

Not only that, magnetic core memories were also expensive and slow.

As we know, memory capacity is measured in bytes.

Next, there are 8 different characteristics of a computer memory system, as below:

1) Location
2) Capacity
3) Unit of transfer
4) Access method
5) Performance
6) Physical type
7) Physical characteristics
8) Organisation

Location of memory (type of memory) can be split into 3 parts: CPU, internal and external.

From the diagram on the left, registers are inside the CPU, and internal memory covers main memory and cache. External memory means storage devices such as disk and tape.

Capacity is the easiest characteristic of memory to understand. I think everyone already knows what capacity means, right?

Ya, it means the total number of bits that can be stored in the memory.

For internal memory, it is usually expressed in bytes or words, and for external memory it is typically expressed in bytes only.
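To make the bytes vs words thing concrete, here is a small Python sketch. The function names and the 4096 x 32-bit memory are just my own example values, not taken from any real machine.

```python
def capacity_in_bits(num_words: int, word_bits: int) -> int:
    """Total number of bits in a memory of num_words words, each word_bits wide."""
    return num_words * word_bits


def capacity_in_bytes(num_words: int, word_bits: int) -> int:
    """Same capacity expressed in bytes (assumes 8 bits per byte)."""
    total_bits = capacity_in_bits(num_words, word_bits)
    if total_bits % 8 != 0:
        raise ValueError("capacity is not a whole number of bytes")
    return total_bits // 8


# Hypothetical internal memory: 4096 words of 32 bits each.
print(capacity_in_bits(4096, 32))   # 131072
print(capacity_in_bytes(4096, 32))  # 16384
```

So the same memory can be described as 4096 words or as 16384 bytes, it only depends on which unit you count in.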

Unit of transfer has 3 different types:

1) Internal - determined by the data bus width, which may not be equal to the word size.
2) External - governed by the block, which is usually much larger than a word.
3) Addressable unit - the smallest data element size that can be individually addressed in the memory.
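Here is a rough Python sketch of how these units relate to each other. It assumes the usual relation that A address bits can reach 2^A addressable units. The helper names, the 64 KiB memory, the 512-byte block and the 64-bit bus are all made-up example values.

```python
def addressable_units(capacity_bytes: int, unit_bytes: int) -> int:
    """Number of addressable units in a memory of the given capacity."""
    if capacity_bytes % unit_bytes != 0:
        raise ValueError("capacity must be a multiple of the unit size")
    return capacity_bytes // unit_bytes


def address_bits_needed(units: int) -> int:
    """Smallest A such that 2**A >= units."""
    if units < 1:
        raise ValueError("need at least one addressable unit")
    return (units - 1).bit_length()


def bus_transfers(block_bytes: int, bus_width_bits: int) -> int:
    """How many bus-width transfers it takes to move one block (rounded up)."""
    block_bits = block_bytes * 8
    return (block_bits + bus_width_bits - 1) // bus_width_bits


# Hypothetical 64 KiB memory, byte-addressable vs addressable in 4-byte words.
print(address_bits_needed(addressable_units(65536, 1)))  # 16
print(address_bits_needed(addressable_units(65536, 4)))  # 14

# Hypothetical 512-byte block moved over a 64-bit data bus.
print(bus_transfers(512, 64))  # 64
```

You can see that a bigger addressable unit needs fewer address bits, and that one external block takes many internal transfers.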

Access Methods:

1) Sequential Access
- Data does not have a unique address
- All data items must be read in sequence until the desired item is found
- Access times are highly variable
- Example: tape drive units

2) Direct Access
- Data items have unique addresses
- Access is done by moving to a general memory "area" first, followed by a sequential access to reach the desired data item
- Example: disk drives

3) Random Access
- Each location has a unique physical address
- Locations can be accessed in any order and all access times are the same
- "RAM" is more properly called read/write memory, since this access technique applies to ROM as well
- Example: main memory

4) Associative Access
- A variation of random access memory
- Data items are accessed based on their contents rather than their actual location
- All memory locations are searched in parallel for a match to a given search pattern, without regard to the size of the memory
- Extremely fast for large memory sizes
- Cost per bit is 5-10 times that of a "normal" RAM cell
- Example: cache
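The four access methods above can be sketched in Python like this. It is only a toy model that I made up to show the difference: it counts how many items get read, and the associative search is simulated with a loop even though real hardware compares all locations at the same time. All names and data are hypothetical.

```python
def sequential_access(items, wanted):
    """Read from the start until the item is found; return how many items were read."""
    for items_read, item in enumerate(items, start=1):
        if item == wanted:
            return items_read
    raise KeyError(wanted)


def direct_access(tracks, track_no, wanted):
    """Jump to one track (the general "area"), then scan it sequentially.

    Returns how many items were read after the jump.
    """
    return sequential_access(tracks[track_no], wanted)


def random_access(memory, address):
    """Go straight to the address; the cost does not depend on the address."""
    return memory[address]


def associative_access(cells, tag):
    """Find data by content (its tag) instead of by location.

    The loop below stands in for a parallel compare of every cell.
    """
    for cell_tag, data in cells:
        if cell_tag == tag:
            return data
    return None


tape = ["a", "b", "c", "d", "e", "f"]
tracks = [["a", "b", "c"], ["d", "e", "f"]]
cells = [(0x1A, "x"), (0x2B, "y"), (0x3C, "z")]

print(sequential_access(tape, "e"))     # 5 items read
print(direct_access(tracks, 1, "e"))    # 2 items read after jumping to track 1
print(random_access(tape, 4))           # e
print(associative_access(cells, 0x2B))  # y
```

Notice that the sequential read count grows with the position of the item, while the direct access only scans inside the chosen track.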

Performance

3 performance parameters are used:

-Access time (latency)
Time between presenting the address and the data being stored or made available for use
-Memory cycle time
Cycle time is access time + recovery time, meaning the time needed before the next access can start
-Transfer rate
Rate at which data can be moved into or out of a memory unit

$$T_N = T_A + \frac{N}{R}$$

where
- $T_N$ = average time to read or write $N$ bits
- $T_A$ = average access time
- $N$ = number of bits
- $R$ = transfer rate, in bits per second (bps)
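To try the formula with numbers, here is a tiny Python sketch. The 10 ms access time, the 8,000,000 bits and the 80,000,000 bps rate are values I picked just for the example, not measurements of a real device.

```python
def transfer_time(access_time_s: float, n_bits: int, rate_bps: float) -> float:
    """Average time in seconds to read or write n_bits: T_N = T_A + N / R."""
    if rate_bps <= 0:
        raise ValueError("transfer rate must be positive")
    return access_time_s + n_bits / rate_bps


# Hypothetical device: T_A = 10 ms, N = 8,000,000 bits, R = 80,000,000 bps.
t_n = transfer_time(0.010, 8_000_000, 80_000_000)
print(f"{t_n:.3f} s")  # 0.110 s
```

Here N/R is 0.1 s, so the access time is only a small part of the total. For a very small N it would be the other way round.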

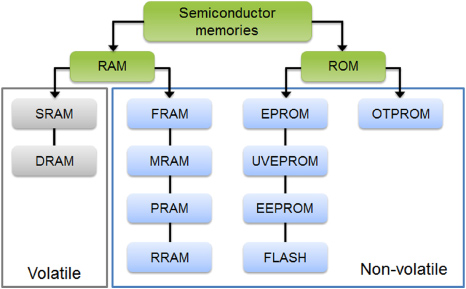

Physical Types & Characteristics

Typically, there are 3 physical types of memory:

-Semiconductor memory
e.g. RAM, ROM
-Magnetic
e.g. disk & tape
-Optical
e.g. CD & DVD

And 4 different physical characteristics:

-Volatile memory (R/W memory)
information decays or is lost when the power is switched off
-Non-volatile memory
no electrical power is needed to keep the information (magnetic surface memories, ROM)
-Non-erasable
contents cannot be altered once written (e.g. ROM)
-Power consumption

The last characteristic is Organisation.

It is the physical arrangement of bits into words, and that arrangement is not always obvious.
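As a very simplified picture of "arranging bits into words", here is a Python sketch that takes the same 16 bits and groups them in two different ways. The bit string and the function name are made up by me, and a real chip arranges its cells physically, not as a string.

```python
def organise(bits: str, word_bits: int) -> list[str]:
    """Group a flat string of bits into consecutive words of word_bits each."""
    if len(bits) % word_bits != 0:
        raise ValueError("bit count must be a multiple of the word size")
    return [bits[i:i + word_bits] for i in range(0, len(bits), word_bits)]


bits = "1011001011110000"  # 16 hypothetical storage cells

print(organise(bits, 4))  # ['1011', '0010', '1111', '0000']
print(organise(bits, 8))  # ['10110010', '11110000']
```

The capacity is 16 bits both times, only the organisation is different: 4 words of 4 bits, or 2 words of 8 bits.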

Finally... I have finished the introduction part.

Hope you guys understand my explanation.

See you guys in the next post =)

Thank you =D

Please leave a comment if you have any problem with this chapter.
